Performance Test - Photo Size#2

Benchmark LeoFS v1.3.0

Purpose

We check the impact on performance when a storage node is killed (i.e., fails) during the benchmark.

Issues:

Summary

- Loading results with and without a node being killed are similar

- During the read phase, the throughput observed by the client is stable

- Before the first node is killed, the effective data set is small enough to sit in memory (page cache)

- After nodes are killed, the remaining nodes receive more requests
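The last point can be made concrete with a rough calculation. The sketch below assumes, purely for illustration, that client requests are spread evenly over the live storage nodes; the real distribution depends on the ring and on replica placement, so treat the numbers as an approximation. The function name `per_node_share` is hypothetical.

```python
from fractions import Fraction


def per_node_share(total_nodes: int, failed_nodes: int) -> Fraction:
    """Fraction of all requests handled by each live node, assuming an even spread."""
    live = total_nodes - failed_nodes
    if live <= 0:
        raise ValueError("no live storage nodes left")
    return Fraction(1, live)


TOTAL_STORAGE_NODES = 5  # S1..S5 in the cluster status of this report

baseline = per_node_share(TOTAL_STORAGE_NODES, 0)
for failed in (0, 1, 2):
    share = per_node_share(TOTAL_STORAGE_NODES, failed)
    # Relative growth of the per-node load compared with the healthy cluster.
    increase = float(share / baseline - 1) * 100
    print(f"{failed} node(s) down: each live node serves "
          f"{float(share):.1%} of requests (+{increase:.0f}%)")
```

Under this even-spread assumption, each surviving node handles about a quarter more requests after one failure and about two thirds more after two failures.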

Next Action

- As in the previous cases, the loading phase needs a closer look

Environment

- OS: Ubuntu Server 14.04.3

- Erlang/OTP: 17.5

- LeoFS: 1.3.0

- CPU: Intel Xeon E5-2630 v3 @ 2.40GHz

- HDD (node[36~50]): 4x ST2000LM003 (2TB 5400rpm 32MB) in RAID-0, mounted at /data/, Ext4

- SSD (node[36~50]): 1x Crucial CT500BX100SSD1, mounted at /ssd/, Ext4

     [System Confiuration]
    -----------------------------------+----------
     Item                              | Value
    -----------------------------------+----------
     Basic/Consistency level
    -----------------------------------+----------
                        system version | 1.3.0
                            cluster Id | leofs_1
                                 DC Id | dc_1
                        Total replicas | 3
              number of successes of R | 1
              number of successes of W | 2
              number of successes of D | 2
     number of rack-awareness replicas | 0
                             ring size | 2^128
    -----------------------------------+----------
     Multi DC replication settings
    -----------------------------------+----------
            max number of joinable DCs | 2
               number of replicas a DC | 1
    -----------------------------------+----------
     Manager RING hash
    -----------------------------------+----------
                     current ring-hash | 4adb34e4
                    previous ring-hash | 4adb34e4
    -----------------------------------+----------

     [State of Node(s)]
    -------+------------------------+--------------+----------------+----------------+----------------------------
     type  |          node          |    state     |  current ring  |   prev ring    |          updated at
    -------+------------------------+--------------+----------------+----------------+----------------------------
      S    | S1@192.168.100.37      | stop         |                |                | 2016-10-07 10:38:06 +0900
      S    | S2@192.168.100.38      | stop         |                |                | 2016-10-07 10:43:18 +0900
      S    | S3@192.168.100.39      | running      | 4adb34e4       | 4adb34e4       | 2016-10-07 09:52:34 +0900
      S    | S4@192.168.100.40      | running      | 4adb34e4       | 4adb34e4       | 2016-10-07 09:52:34 +0900
      S    | S5@192.168.100.41      | running      | 4adb34e4       | 4adb34e4       | 2016-10-07 09:52:34 +0900
      G    | G0@192.168.100.35      | running      | 4adb34e4       | 4adb34e4       | 2016-10-07 09:52:37 +0900
    -------+------------------------+--------------+----------------+----------------+----------------------------
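To relate the consistency settings (3 replicas, R = 1, W = 2) to the two stopped nodes, the following sketch counts how many replica sets can still meet the read and write quorums. It is a simplified model, not LeoFS's actual placement algorithm: it assumes every 3-node combination of the five storage nodes is an equally likely replica set, and it ignores any queuing or recovery LeoFS performs for unavailable replicas. The function name `quorum_availability` is hypothetical.

```python
from itertools import combinations

NODES = ["S1", "S2", "S3", "S4", "S5"]
N, R, W = 3, 1, 2  # total replicas, required read successes, required write successes


def quorum_availability(down):
    """Return (readable, writable) fractions of replica sets when `down` nodes are stopped.

    Assumption: replica sets are all N-node combinations, equally weighted.
    """
    replica_sets = list(combinations(NODES, N))
    readable = sum(1 for rs in replica_sets if len(set(rs) - down) >= R)
    writable = sum(1 for rs in replica_sets if len(set(rs) - down) >= W)
    return readable / len(replica_sets), writable / len(replica_sets)


for down in (set(), {"S1"}, {"S1", "S2"}):
    readable, writable = quorum_availability(down)
    print(f"down={sorted(down)}: readable={readable:.0%}, writable={writable:.0%}")
```

In this model a single failure leaves every replica set with at least two live copies, so both quorums still hold. With two nodes down, reads remain satisfiable everywhere (R = 1), while replica sets containing both stopped nodes can no longer reach W = 2.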

- basho-bench Configuration:

    - Duration: 30 minutes

    - Total number of concurrent processes: 64

    - Total number of keys: 4000000

    - Value size groups (bytes):

        - 4096..8192: 15%

        - 8192..16384: 25%

        - 16384..32768: 23%

        - 32768..65536: 22%

        - 65536..131072: 15%

    - basho_bench driver: basho_bench_driver_leofs.erl

    - Configuration file:

- LeoFS Configuration:

    - Manager_0: leo_manager_0.conf

    - Gateway: leo_gateway.conf

        - Disk Cache: 0

        - Mem Cache: 0

    - Storage: leo_storage.conf

        - Container Path: /ssd/avs
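The value size groups and key count above give a rough upper bound on the data set size. The sketch below assumes sizes are uniformly distributed within each group (so the midpoint is the group mean) and that every key is written exactly once; neither is stated in the configuration, and the data actually loaded in a 30-minute run may be smaller. All names in the code are illustrative.

```python
# (low, high, weight) for each value size group, in bytes
VALUE_SIZE_GROUPS = [
    (4096, 8192, 0.15),
    (8192, 16384, 0.25),
    (16384, 32768, 0.23),
    (32768, 65536, 0.22),
    (65536, 131072, 0.15),
]
TOTAL_KEYS = 4_000_000
REPLICAS = 3        # "Total replicas" from the system configuration
GIB = 1024 ** 3


def mean_value_size(groups):
    """Weighted mean object size, assuming a uniform distribution inside each group."""
    return sum(weight * (low + high) / 2 for low, high, weight in groups)


mean_bytes = mean_value_size(VALUE_SIZE_GROUPS)
logical_bytes = mean_bytes * TOTAL_KEYS    # one copy of every key
stored_bytes = logical_bytes * REPLICAS    # all replicas, excluding metadata overhead

print(f"mean object size : {mean_bytes / 1024:.1f} KiB")
print(f"logical data set : {logical_bytes / GIB:.1f} GiB")
print(f"with {REPLICAS} replicas  : {stored_bytes / GIB:.1f} GiB")
```

Comparing these figures with the memory available on the storage nodes gives a quick check on whether reads are likely to be served from the page cache.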

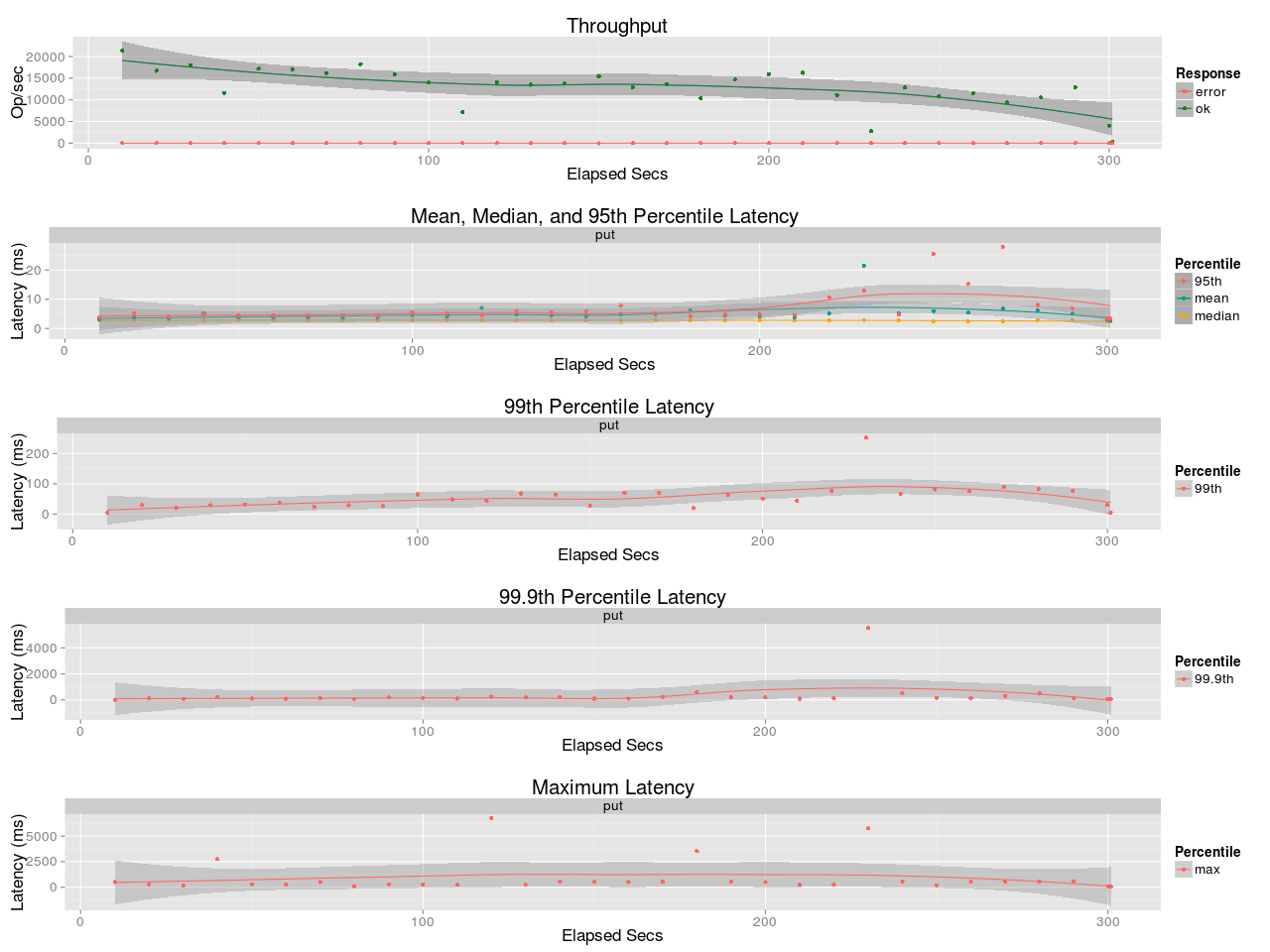

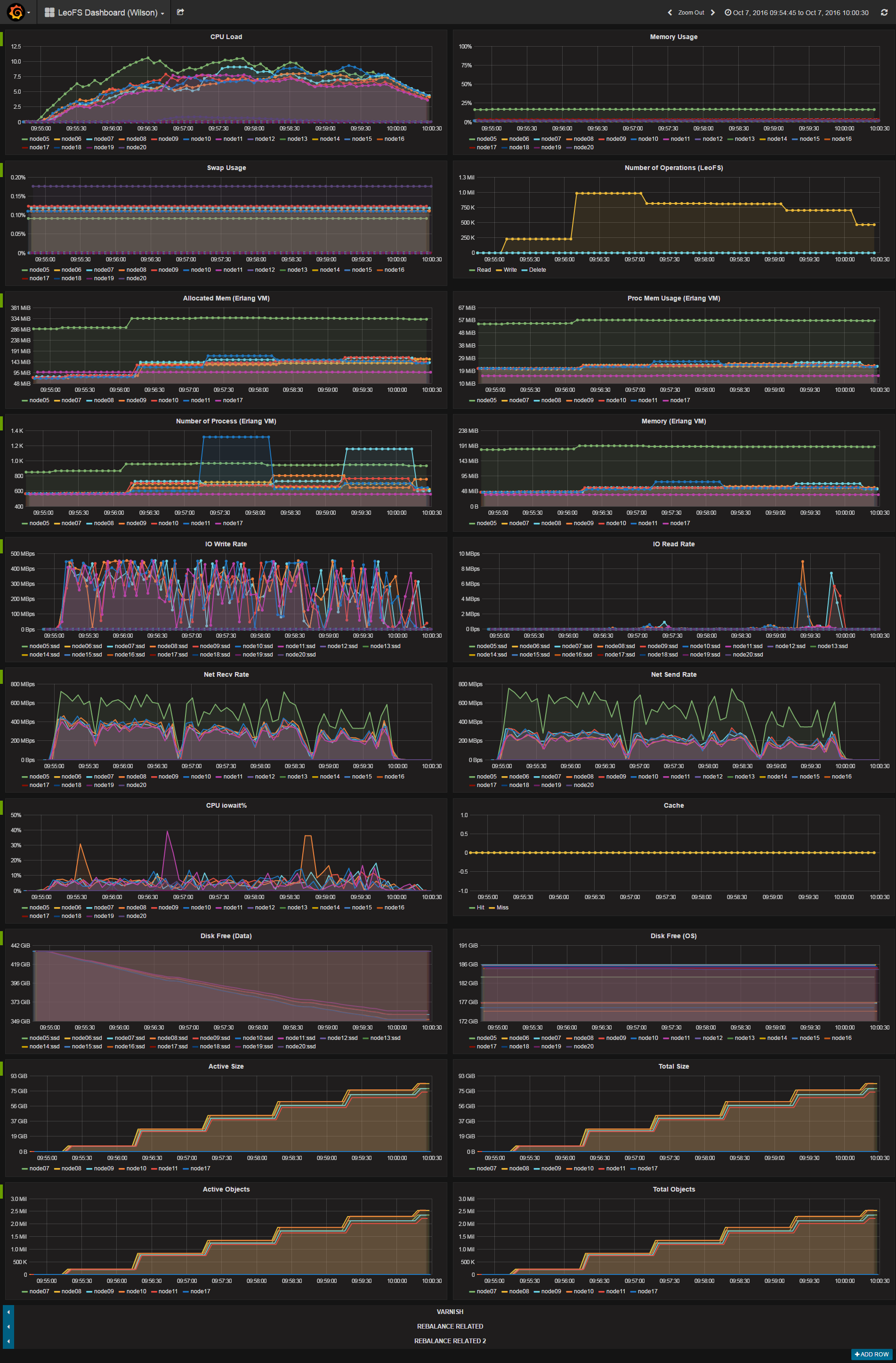

Results:

-

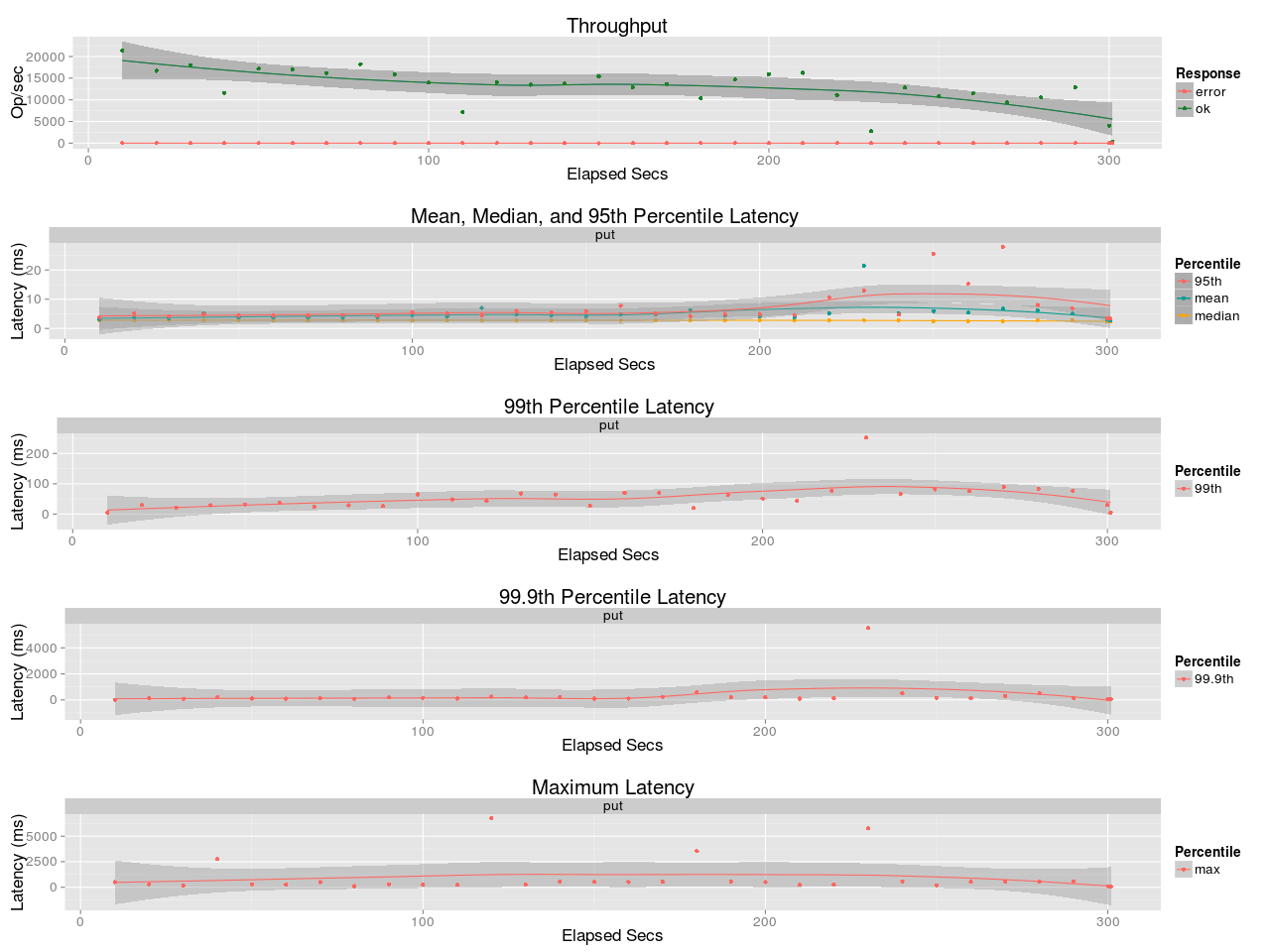

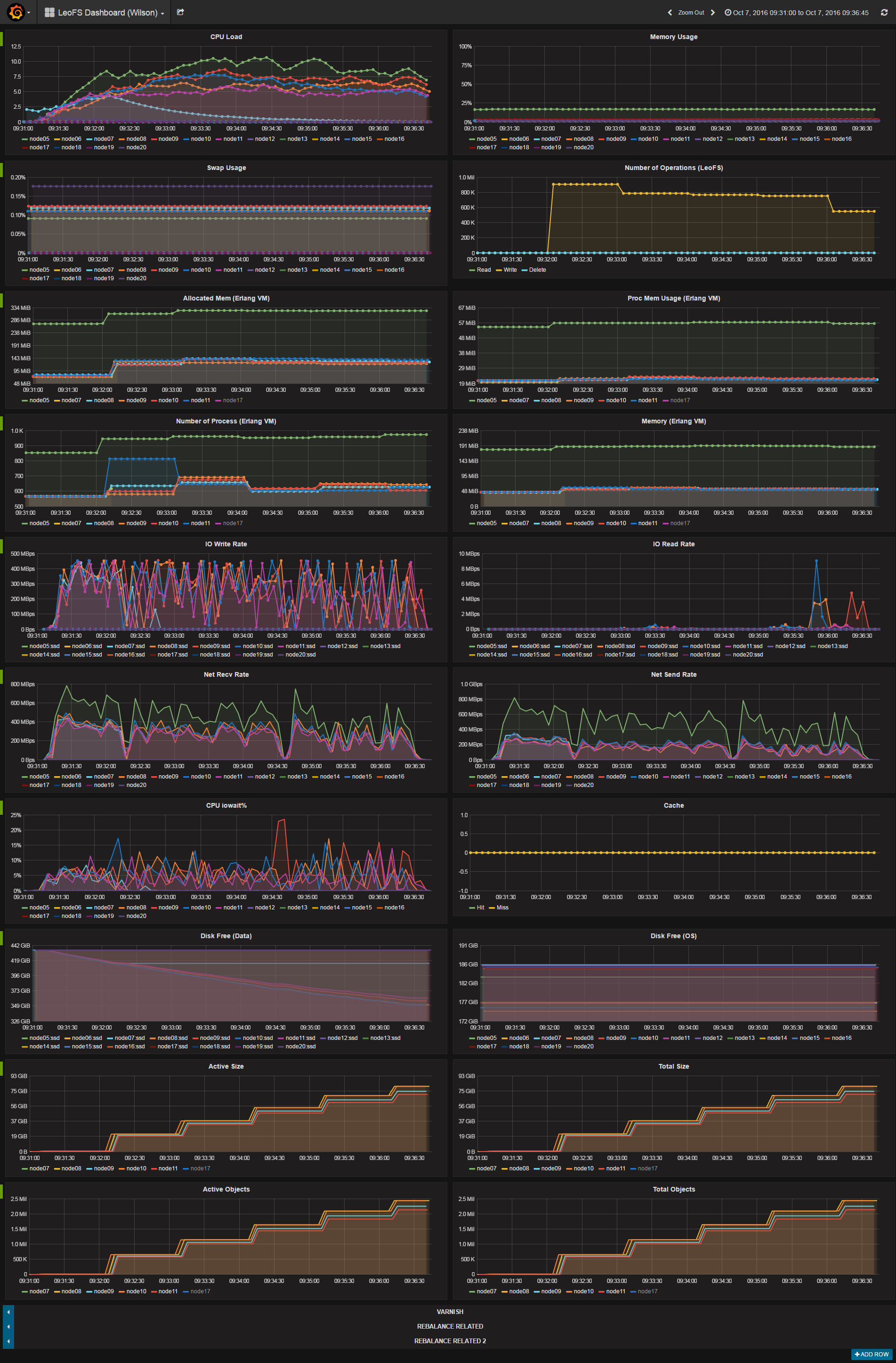

Loading (Kill node07 after 1 minute)

- Loading

-

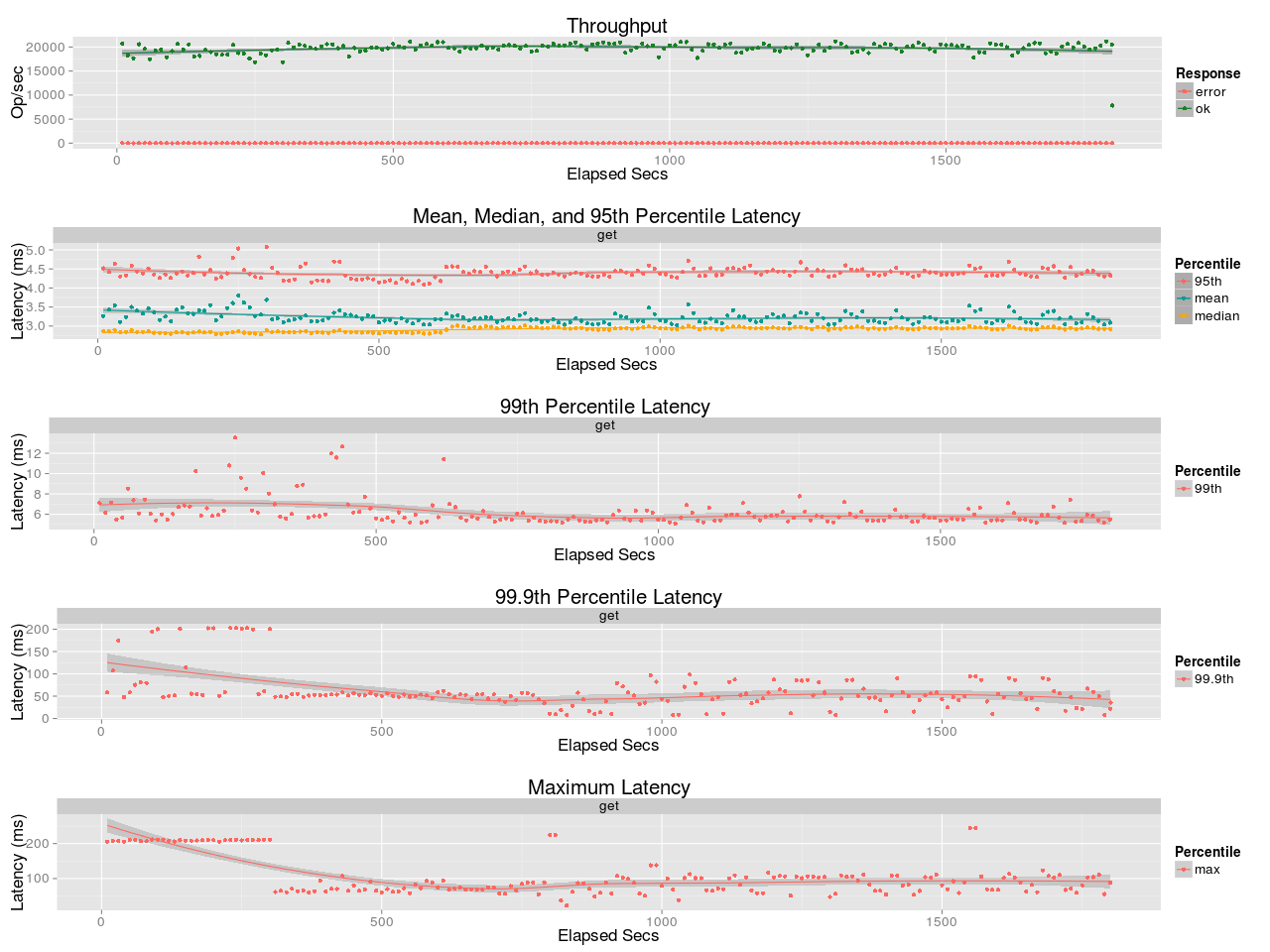

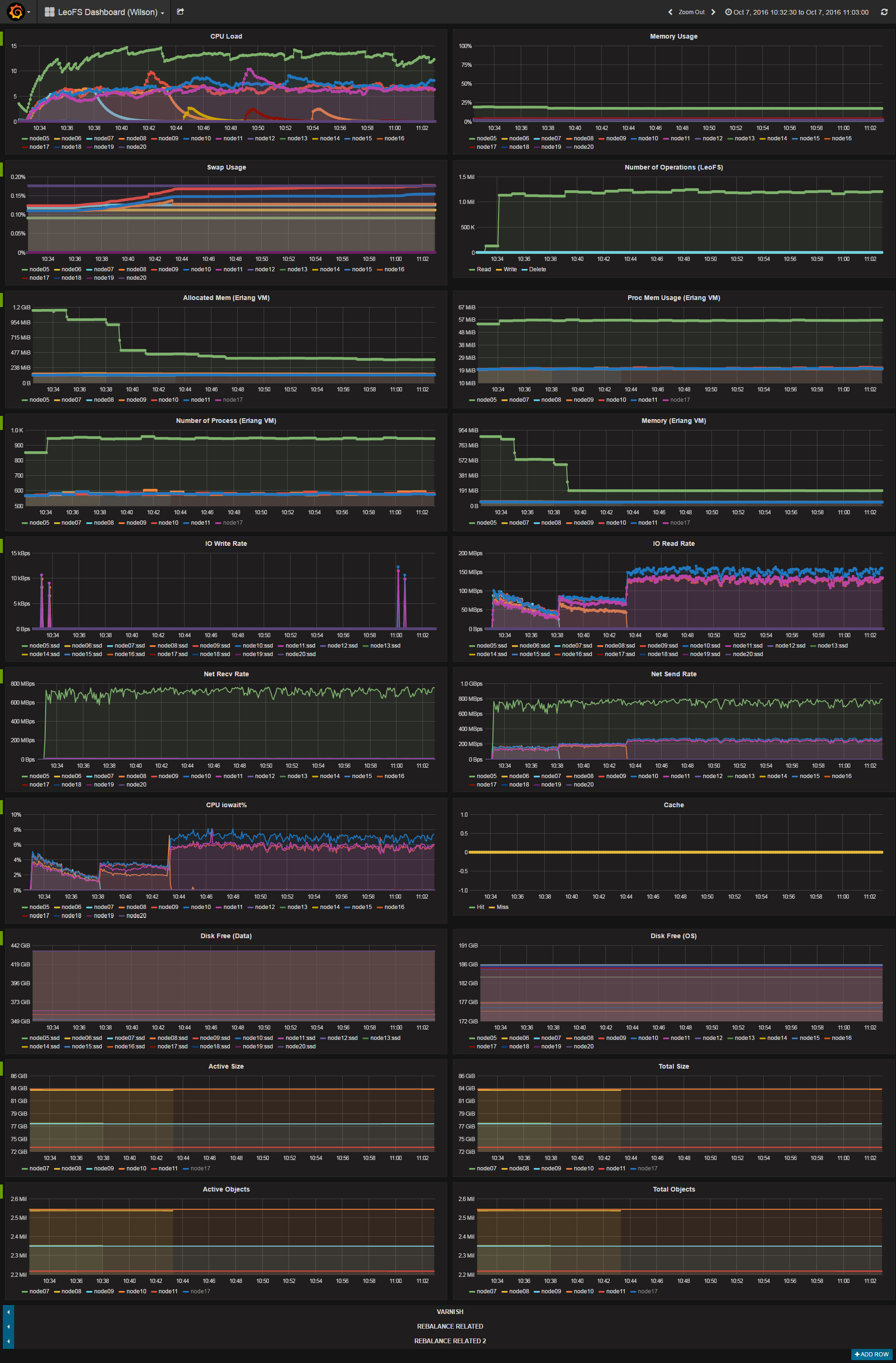

Reading (Kill node07 after 5 minutes, node08 after 10 minutes)