Overload Test

Benchmark LeoFS v1.3.1-dev

Purpose

We measure the performance of LeoFS while the disk of a storage node is overloaded.

Summary

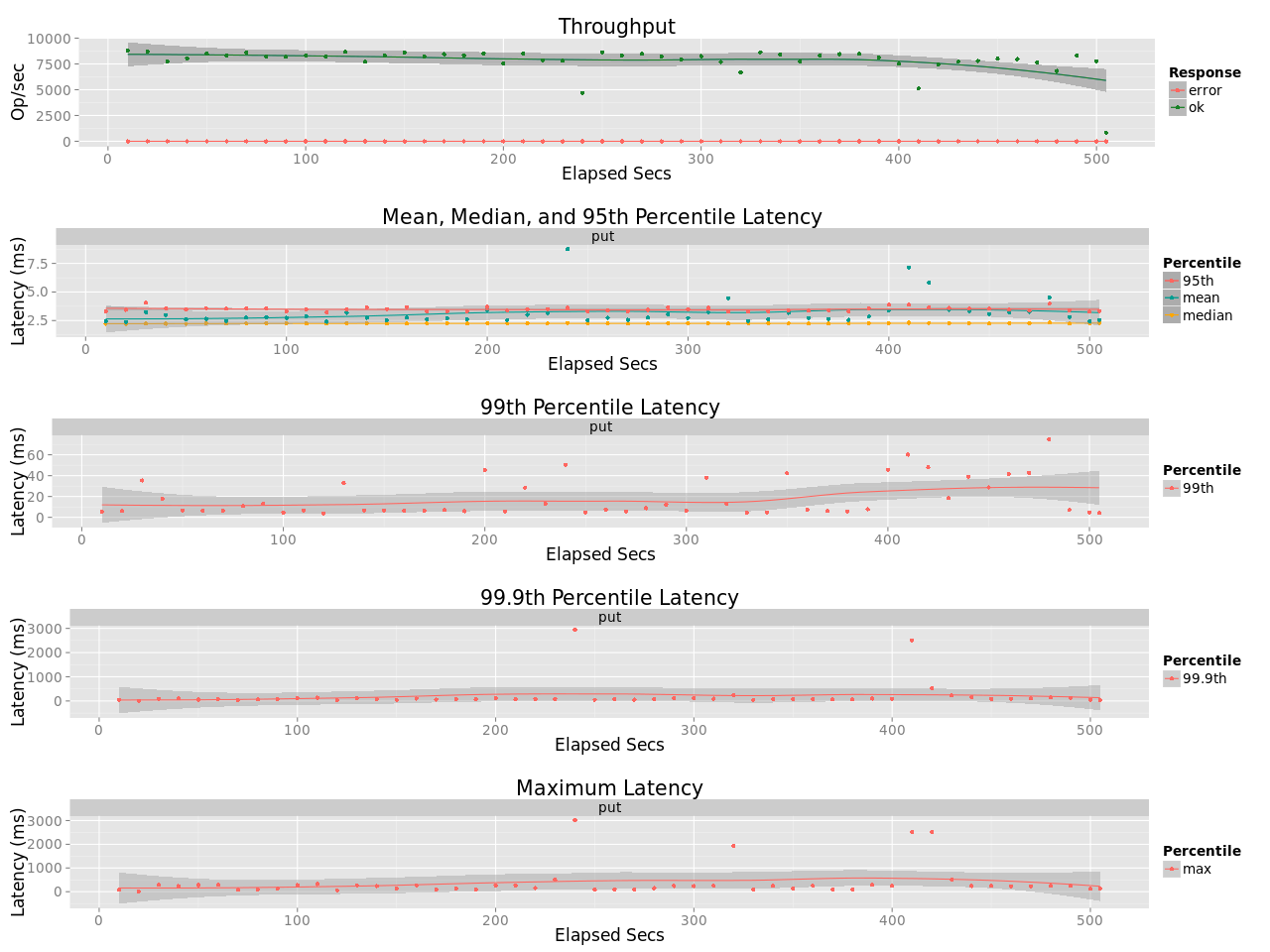

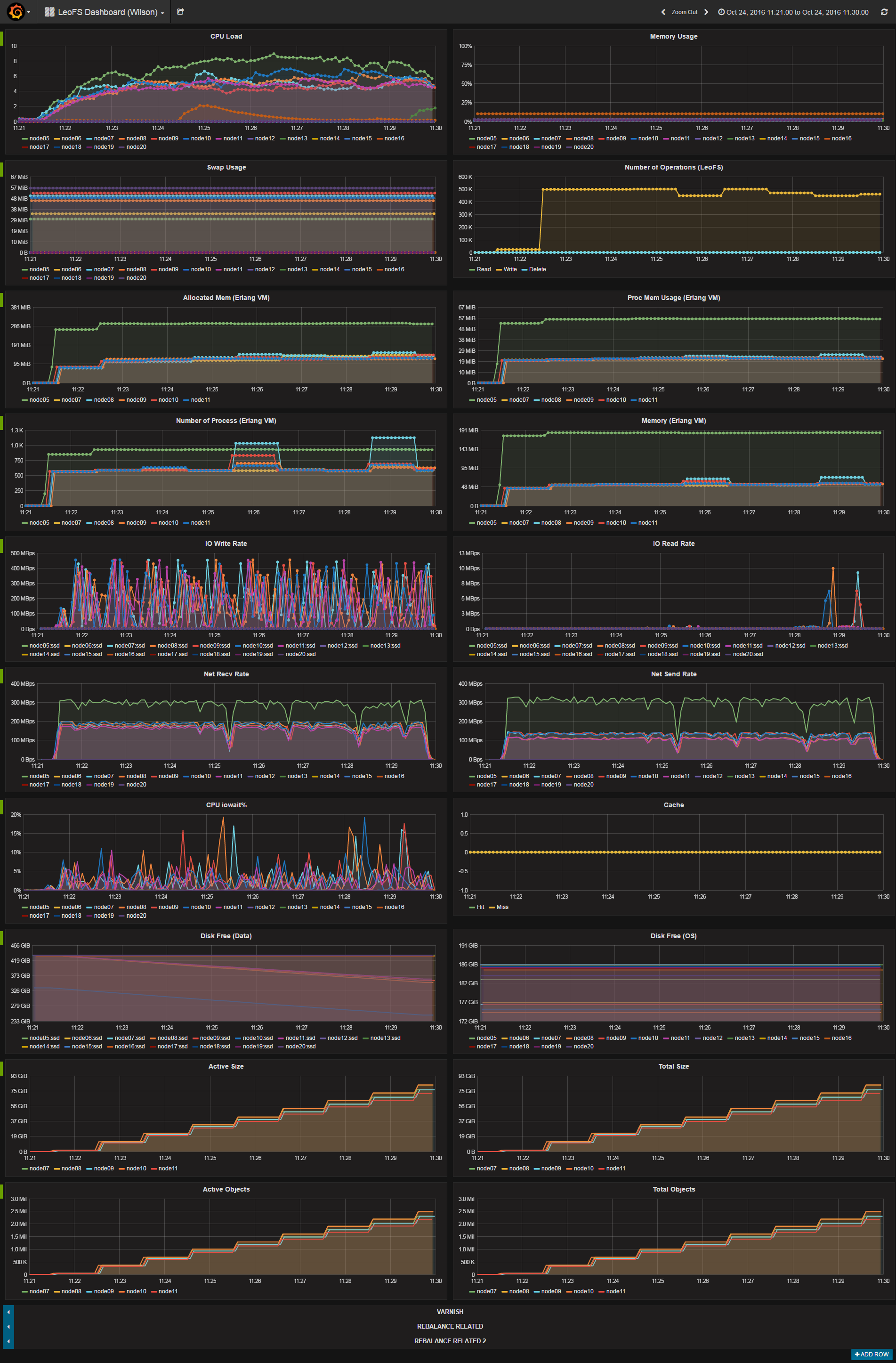

When the disk (SSD) of a storage node is overloaded, some operations slow down to latencies on the order of seconds, and LeoFS can appear to stall.
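To locate these multi-second operations in the raw results, one can scan the per-operation latency CSV files that basho-bench produces. The sketch below assumes the usual basho-bench summary layout (header `elapsed, window, n, min, mean, median, 95th, 99th, 99_9th, max, errors`, latencies in microseconds); that layout, the one-second threshold and the helper name `find_stalls` are assumptions for illustration, not part of the original benchmark.

```python
import csv
import io

# Assumed basho-bench latency CSV layout (latencies in microseconds):
# elapsed, window, n, min, mean, median, 95th, 99th, 99_9th, max, errors
STALL_THRESHOLD_US = 1_000_000  # hypothetical threshold: 1 second


def find_stalls(csv_text, threshold_us=STALL_THRESHOLD_US):
    """Return (elapsed_seconds, max_latency_us) for every reporting window
    whose maximum latency reached the threshold."""
    # skipinitialspace strips the blank after each comma, in the header too
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    stalls = []
    for row in reader:
        max_us = float(row["max"])
        if max_us >= threshold_us:
            stalls.append((float(row["elapsed"]), max_us))
    return stalls


if __name__ == "__main__":
    # Synthetic rows for illustration only, not measured data.
    sample = (
        "elapsed, window, n, min, mean, median, 95th, 99th, 99_9th, max, errors\n"
        "10.0, 10.0, 2000, 900, 5000.5, 4000, 12000, 20000, 30000, 45000, 0\n"
        "20.0, 10.0, 1500, 950, 90000.1, 5000, 15000, 800000, 2500000, 3200000, 0\n"
    )
    for elapsed, max_us in find_stalls(sample):
        print(f"t={elapsed:.0f}s: max latency {max_us / 1e6:.1f}s")
```

Looking at the maximum per window, rather than the mean, is what exposes rare stalls: a handful of multi-second requests barely move the mean when thousands of fast ones share the same window.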

Methodology

- Load images at a constant rate

- Overload the disk (SSD) of one storage node (S4@node10)

$ dd if=/dev/zero of=/ssd/testfile bs=16M count=6400
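The `dd` command above writes 6400 blocks of 16 MiB each, i.e. 16 MiB × 6400 = 100 GiB of zeros, onto the same SSD that holds the storage container (`/ssd/avs`). For reproducing the overload from a script, a rough Python equivalent might look like the sketch below; the function name `write_zeros` and its `fsync` flag are illustrative assumptions. Like plain `dd` without `conv=fsync`, it writes through the page cache unless `fsync=True` is passed.

```python
import os
import tempfile

MIB = 1024 * 1024


def write_zeros(path, block_size=16 * MIB, count=6400, fsync=False):
    """Write `count` blocks of `block_size` zero bytes to `path`, roughly like
    `dd if=/dev/zero of=path bs=16M count=6400`. Returns bytes written."""
    block = bytes(block_size)  # one block of zero bytes, reused for each write
    written = 0
    with open(path, "wb") as f:
        for _ in range(count):
            written += f.write(block)
        if fsync:
            f.flush()
            os.fsync(f.fileno())  # force the data down to the device
    return written


if __name__ == "__main__":
    # Volume of the original command: 16 MiB * 6400 = 100 GiB.
    print(16 * MIB * 6400 / 1024**3, "GiB")
    # Small dry run into a temporary directory instead of /ssd/testfile.
    with tempfile.TemporaryDirectory() as d:
        n = write_zeros(os.path.join(d, "testfile"), block_size=MIB, count=4)
        print(n, "bytes written")
```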

Environment

- OS: Ubuntu Server 14.04.3

- Erlang/OTP: 17.5

- LeoFS: 1.3.1-dev

- CPU: Intel Xeon E5-2630 v3 @ 2.40GHz

- HDD (node[36~50]): 4x ST2000LM003 (2TB, 5400rpm, 32MB) in RAID-0, mounted at /data/, Ext4

- SSD (node[36~50]): 1x Crucial CT500BX100SSD1, mounted at /ssd/, Ext4

```
[System Confiuration]
-----------------------------------+----------
 Item                              | Value
-----------------------------------+----------
 Basic/Consistency level
-----------------------------------+----------
                    system version | 1.3.0
                        cluster Id | leofs_1
                             DC Id | dc_1
                    Total replicas | 3
          number of successes of R | 1
          number of successes of W | 2
          number of successes of D | 2
 number of rack-awareness replicas | 0
                         ring size | 2^128
-----------------------------------+----------
 Multi DC replication settings
-----------------------------------+----------
        max number of joinable DCs | 2
           number of replicas a DC | 1
-----------------------------------+----------
 Manager RING hash
-----------------------------------+----------
                 current ring-hash | 4adb34e4
                previous ring-hash | 4adb34e4
-----------------------------------+----------

[State of Node(s)]
-------+------------------------+--------------+----------------+----------------+----------------------------
 type  | node                   | state        | current ring   | prev ring      | updated at
-------+------------------------+--------------+----------------+----------------+----------------------------
  S    | S1@192.168.100.37      | running      | 4adb34e4       | 4adb34e4       | 2016-10-24 10:18:56 +0900
  S    | S2@192.168.100.38      | running      | 4adb34e4       | 4adb34e4       | 2016-10-24 10:18:56 +0900
  S    | S3@192.168.100.39      | running      | 4adb34e4       | 4adb34e4       | 2016-10-24 10:18:56 +0900
  S    | S4@192.168.100.40      | running      | 4adb34e4       | 4adb34e4       | 2016-10-24 10:18:56 +0900
  S    | S5@192.168.100.41      | running      | 4adb34e4       | 4adb34e4       | 2016-10-24 10:18:56 +0900
  G    | G0@192.168.100.35      | running      | 4adb34e4       | 4adb34e4       | 2016-10-24 10:18:59 +0900
-------+------------------------+--------------+----------------+----------------+----------------------------
```

- basho-bench Configuration:

    - Duration: 30 minutes
    - Total number of concurrent processes: 64
    - Total number of keys: 4,000,000
    - Rate: 200 ops
    - Value size groups (bytes):
        - 4096..8192: 15%
        - 8192..16384: 25%
        - 16384..32768: 23%
        - 32768..65536: 22%
        - 65536..131072: 15%
    - basho_bench driver: basho_bench_driver_leofs.erl
    - Configuration file:

- LeoFS Configuration:

    - Manager_0: leo_manager_0.conf
    - Gateway: leo_gateway.conf
        - Disk Cache: 0
        - Mem Cache: 0
    - Storage: leo_storage.conf
        - Container Path: /ssd/avs
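The consistency settings in the cluster status (Total replicas N = 3, R = 1, W = 2, D = 2) can be read as Dynamo-style quorums. Assuming an operation succeeds as soon as the stated number of replicas respond, it tolerates N − R, N − W or N − D unresponsive replicas, and reads are only guaranteed to overlap the latest write when R + W > N. The `Quorum` class below is a hypothetical illustration of that arithmetic, not LeoFS code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quorum:
    """Replication settings as reported by the cluster status output."""
    n: int  # total replicas
    r: int  # successes required for a read
    w: int  # successes required for a write
    d: int  # successes required for a delete

    def tolerated_failures(self):
        """Replicas that may be unavailable while each operation still succeeds,
        assuming a simple 'first k of n responses' quorum."""
        return {"read": self.n - self.r,
                "write": self.n - self.w,
                "delete": self.n - self.d}

    def read_write_overlap(self):
        """True if every read quorum intersects every write quorum (R + W > N)."""
        return self.r + self.w > self.n


if __name__ == "__main__":
    leofs_1 = Quorum(n=3, r=1, w=2, d=2)
    print(leofs_1.tolerated_failures())  # {'read': 2, 'write': 1, 'delete': 1}
    print(leofs_1.read_write_overlap())  # False: 1 + 2 is not greater than 3
```

Under this reading, one overloaded node among an object's three replicas should not by itself make reads or writes fail; whether a request still waits on the slow replica depends on how LeoFS dispatches replica requests, which is not covered here.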
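The value size groups give a rough idea of the data volume behind each operation. Assuming a group is chosen with the listed percentage and the size is then uniform within that group (an assumption; how the driver samples sizes is not described here), the expected value size is the weighted mean of the group midpoints, about 35,205 bytes (≈ 34.4 KiB). The helper name `mean_value_size` is hypothetical.

```python
# Value size groups from the benchmark: (min_bytes, max_bytes, percent).
VALUE_SIZE_GROUPS = [
    (4096, 8192, 15),
    (8192, 16384, 25),
    (16384, 32768, 23),
    (32768, 65536, 22),
    (65536, 131072, 15),
]


def mean_value_size(groups):
    """Expected value size in bytes, assuming sizes are uniform within
    [min, max] of the group picked with the given percentage."""
    total_pct = sum(pct for _, _, pct in groups)
    if total_pct != 100:
        raise ValueError(f"percentages sum to {total_pct}, expected 100")
    # Midpoint of a uniform range times its weight, summed over all groups.
    return sum((lo + hi) / 2 * pct for lo, hi, pct in groups) / 100


if __name__ == "__main__":
    mean = mean_value_size(VALUE_SIZE_GROUPS)
    print(f"{mean:.2f} bytes (~{mean / 1024:.1f} KiB)")
```

Multiplying this mean by an operation rate in ops/s gives an approximate payload rate in bytes/s, before replication.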

Results


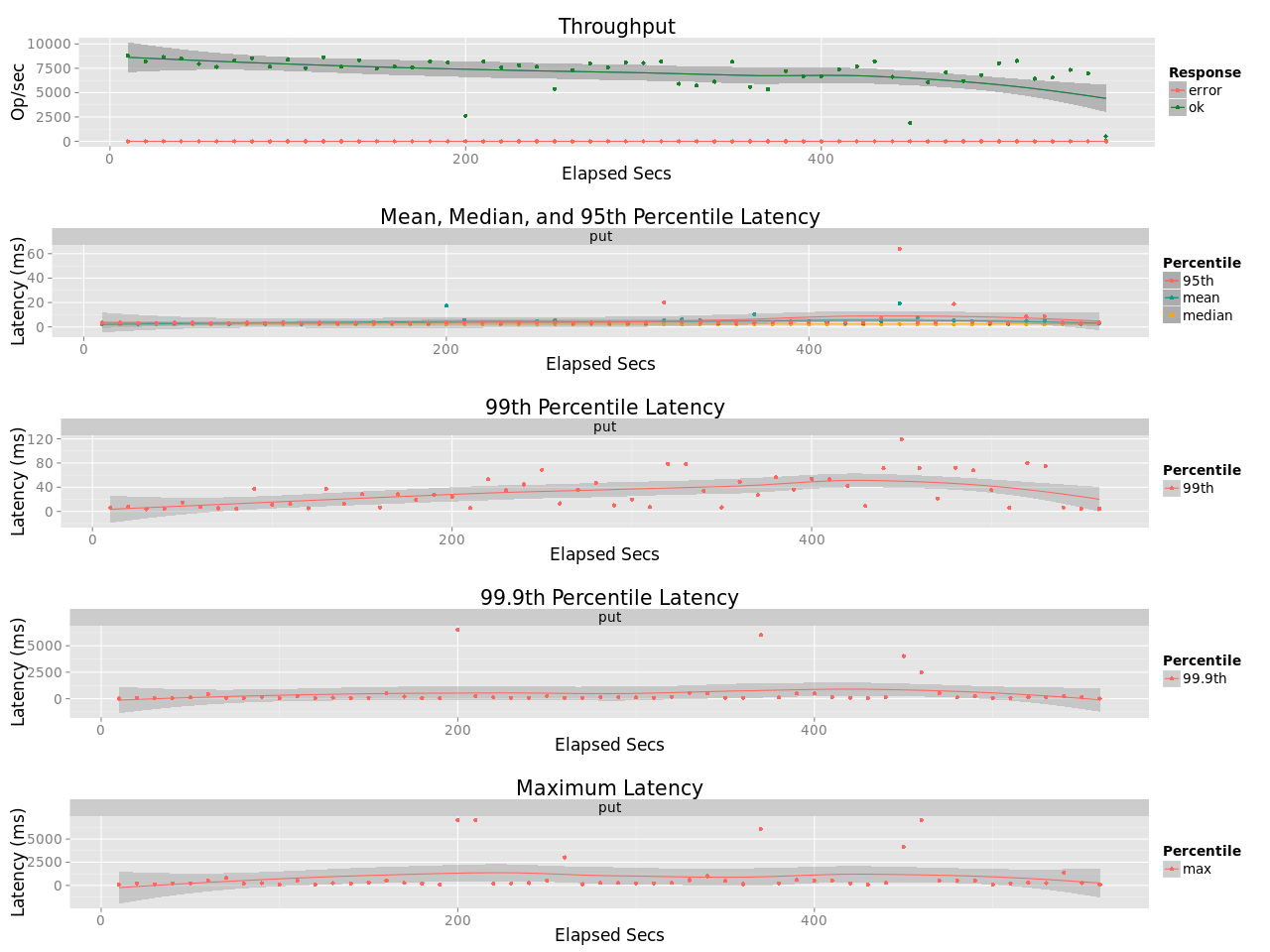

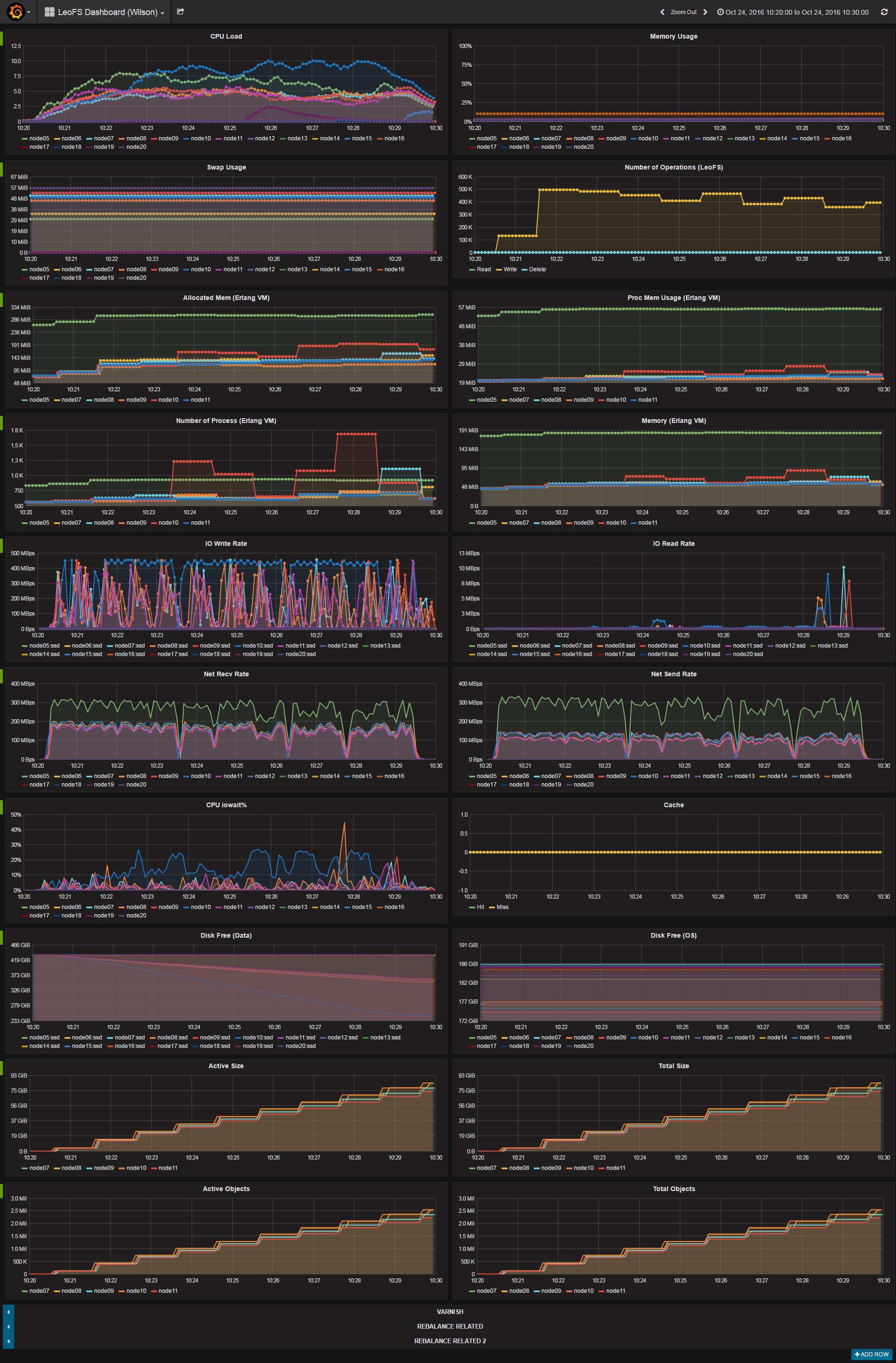

Normal


Overload